July 18, 2024

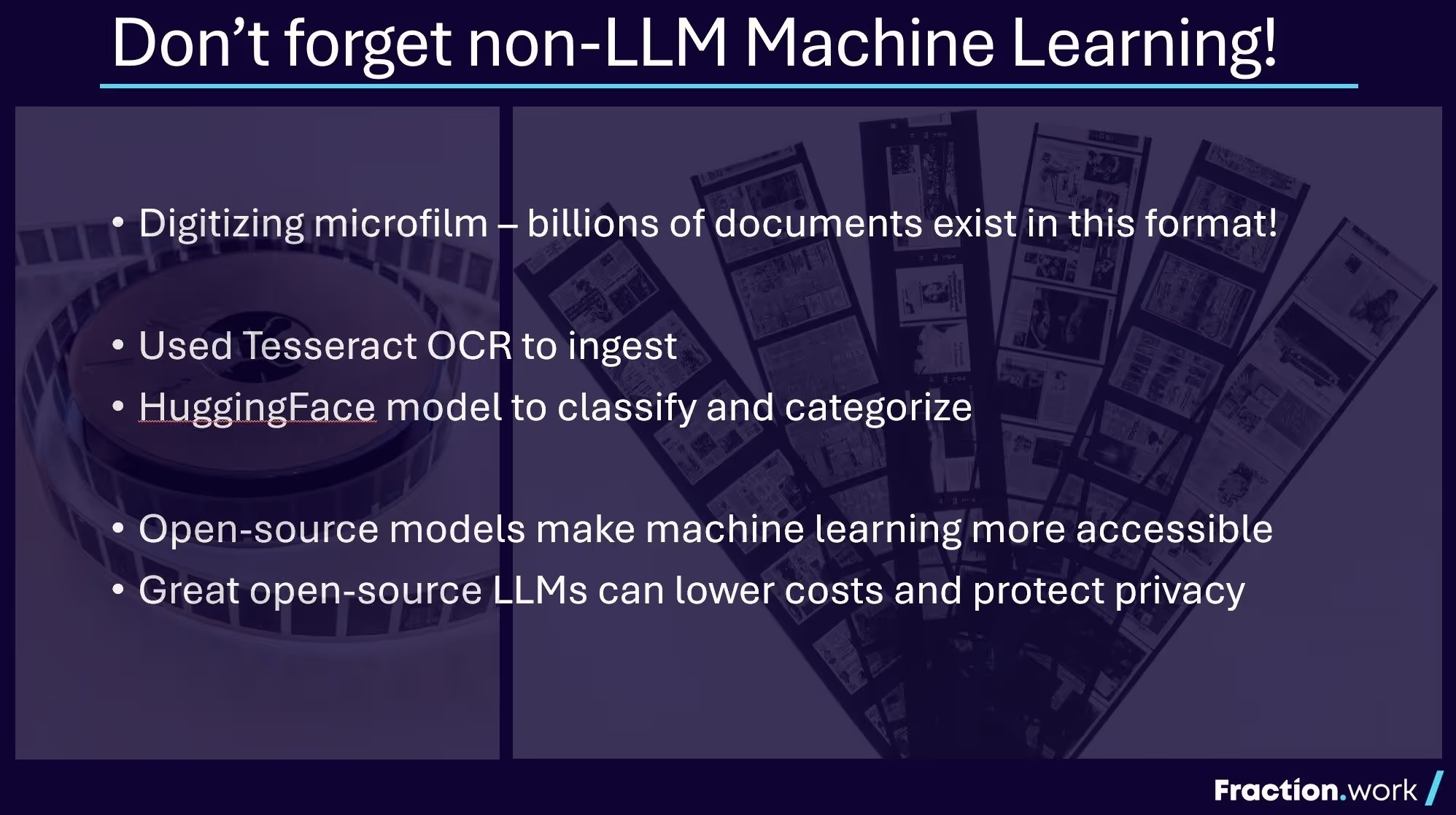

In the dynamic world of machine learning, the spotlight often shines brightest on the latest advancements in large language models (LLMs). Yet an LLM is not always the right tool. Consider a full stack engineer tasked with processing thousands of microfilm images: a purpose-built non-LLM solution can deliver both better accuracy and lower cost.

The gains are often substantial.

With open-source OCR and classification models readily available, such tasks become not only manageable but also impressively effective, harnessing the full potential of modern machine learning.

The landscape of machine learning extends far beyond LLM-based solutions.

Non-LLM based machine learning encompasses a variety of techniques and algorithms. This realm includes traditional methods such as decision trees, random forests, support vector machines, and clustering algorithms. Additionally, neural networks specialized for specific tasks also fall under this umbrella.

These methods serve distinct purposes.

Instead of generalizing, they excel at targeted predictions and classifications, which is crucial for applications involving structured data and specific problem domains. By leveraging these techniques, full stack engineers can build robust and scalable solutions.
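To make "targeted predictions on structured data" concrete, here is a minimal sketch of the simplest tree-based model: a decision stump, which is a one-split decision tree. The feature (page count), the labels, and the function names are all hypothetical and chosen only for illustration; a real project would more likely reach for a library implementation of decision trees or random forests.

```python
from typing import List, Tuple


def fit_stump(xs: List[float], ys: List[int]) -> Tuple[float, int]:
    """Find the threshold on one numeric feature that best separates labels 0 and 1.

    Returns (threshold, label_above): rows with x > threshold are predicted
    as label_above, all other rows as the opposite label.
    """
    best_threshold, best_label_above, best_correct = 0.0, 1, -1
    values = sorted(set(xs))
    # Candidate thresholds are midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
    for threshold in candidates:
        for label_above in (0, 1):
            correct = sum(
                1
                for x, y in zip(xs, ys)
                if (label_above if x > threshold else 1 - label_above) == y
            )
            if correct > best_correct:
                best_threshold, best_label_above, best_correct = (
                    threshold,
                    label_above,
                    correct,
                )
    return best_threshold, best_label_above


def predict_stump(model: Tuple[float, int], x: float) -> int:
    threshold, label_above = model
    return label_above if x > threshold else 1 - label_above


# Hypothetical structured data: page count per document,
# label 1 = "multi-page filing", label 0 = "single record".
page_counts = [1, 2, 2, 3, 12, 15, 20, 30]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

model = fit_stump(page_counts, labels)
print(model, predict_stump(model, 4), predict_stump(model, 18))
```

The whole model is a single threshold and a label, which is why methods in this family are cheap to train, cheap to serve, and easy to inspect.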

Approaching machine learning with non-LLM models allows for greater flexibility, leveraging tailored algorithms that are often more resource-efficient. Full stack engineers, through platforms like HuggingFace, have access to a wealth of pre-trained models and libraries. These resources foster innovation and reduce the barrier to entry, democratizing machine learning advancements across various industries.

Utilizing non-LLM based machine learning, full stack engineers gain a significant edge in crafting highly efficient, specialized solutions. They are empowered to integrate targeted ML models seamlessly within their tech stacks.

This integration minimizes inefficiencies and resource drain.

They can prioritize operational costs and performance parameters, leading to optimized deployments that meet both business and technical goals. Deep ML expertise is no longer a prerequisite for this kind of work.

The ecosystem now supports full stack engineers who leverage accessible platforms and open-source tools. This enables them to deploy state-of-the-art models without extensive learning curves.

Purpose-built models are also often more cost-effective than generalized LLMs, offering high accuracy at a fraction of the expense. Full stack engineers can stay ahead by adopting these advanced, yet simplified, methodologies.

Lastly, they are not limited by their existing team’s skill set. Resources like Fraction facilitate the identification and procurement of any necessary expertise, ensuring that full stack engineers can execute sophisticated machine learning projects successfully.

When exploring non-LLM based machine learning techniques, full stack engineers should consider convolutional neural networks (CNNs) for image recognition tasks.

For example, they might deploy a CNN model to categorize and analyze a vast collection of images, such as microfilm documents, with high precision and efficiency.

Additionally, support vector machines (SVMs) can excel in classification tasks, enabling accurate data categorization without the extensive computational overhead of LLMs.
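As a rough illustration of why an SVM is computationally light, the sketch below trains a linear SVM from scratch by taking subgradient steps on a regularized hinge loss. It is a teaching sketch under stated assumptions, not a production implementation: the two-feature data points are made up, labels must be -1 or +1, and the hyperparameter values are arbitrary. In practice an engineer would normally use an existing library such as scikit-learn rather than hand-rolled training code.

```python
from typing import List, Sequence, Tuple


def train_linear_svm(
    X: Sequence[Sequence[float]],
    y: Sequence[int],  # labels must be -1 or +1
    lam: float = 0.01,  # L2 regularization strength (arbitrary choice)
    lr: float = 0.1,  # learning rate (arbitrary choice)
    epochs: int = 200,
) -> Tuple[List[float], float]:
    """Fit weights w and bias b with per-sample subgradient steps on the hinge loss."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:
                # Inside the margin or misclassified: the hinge term is active,
                # so step along yi * xi while still shrinking w.
                w = [wj - lr * (lam * wj - yi * xj) for wj, xj in zip(w, xi)]
                b += lr * yi
            else:
                # Correct with enough margin: only the regularizer acts on w.
                w = [wj - lr * lam * wj for wj in w]
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    score = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if score >= 0 else -1


# Hypothetical, already-centered features for two document classes.
X = [(-2, -1), (-1, -2), (-2, -2), (2, 1), (1, 2), (2, 2)]
y = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(X, y)
print(predict(w, b, (3, 3)), predict(w, b, (-3, -3)))
```

The trained model is just a weight vector and a bias, so inference is one dot product per document.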

In the realm of non-LLM based machine learning, OCR models stand as crucial tools. These models excel at converting various types of documents into machine-readable text, significantly enhancing data accessibility.

By implementing OCR models, full stack engineers can effectively process large volumes of textual data, such as historical microfilm, with high accuracy and efficiency. This targeted approach avoids the pitfalls of general LLMs, providing cost-effective solutions.

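On clean printed text, modern OCR models can reach character-level accuracy of 98% or higher; degraded sources such as aged microfilm typically score lower and should be measured on a sample.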
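An accuracy figure only means something once you know how it is computed. One common way to score OCR output is character accuracy: one minus the edit distance between the OCR text and a hand-checked reference, divided by the reference length. The sketch below shows that calculation; the sample strings are invented, and the function names are illustrative.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(
                min(
                    prev[j] + 1,  # delete ca
                    curr[j - 1] + 1,  # insert cb
                    prev[j - 1] + cost,  # substitute (or match)
                )
            )
        prev = curr
    return prev[-1]


def character_accuracy(reference: str, ocr_output: str) -> float:
    """Return 1 - (edit distance / reference length), clamped at 0."""
    if not reference:
        return 1.0 if not ocr_output else 0.0
    errors = edit_distance(reference, ocr_output)
    return max(0.0, 1.0 - errors / len(reference))


# Invented example: two character substitutions in a 24-character line.
reference = "County Court Record 1923"
ocr_output = "Connty Court Rec0rd 1923"
print(round(character_accuracy(reference, ocr_output), 4))
```

Running this over a few hundred hand-transcribed pages gives a defensible accuracy number for a specific collection, rather than a figure borrowed from a model card.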

The accessibility of state-of-the-art OCR models via platforms like HuggingFace means that full stack engineers can quickly integrate these solutions into their projects. Even without specialized ML expertise, teams can deploy robust OCR and classification systems, optimizing workflows and improving data processing capabilities.
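Integration work is mostly plumbing around the model call. The sketch below shows one way to structure a batch OCR step so that the engine is swappable: the pipeline accepts any function that maps image bytes to text. The `stub_engine` here is a hypothetical stand-in that only decodes bytes so the example runs without a model; in a real project it would be replaced by a call into whichever OCR model the team has chosen.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Any callable that turns raw image bytes into text can act as the engine.
OcrEngine = Callable[[bytes], str]


@dataclass
class PageResult:
    page_id: str
    text: str
    error: str = ""


def run_ocr_batch(pages: Dict[str, bytes], engine: OcrEngine) -> List[PageResult]:
    """Run the engine over every page, recording failures instead of stopping."""
    results: List[PageResult] = []
    for page_id, image_bytes in pages.items():
        try:
            text = engine(image_bytes)
            results.append(PageResult(page_id, text.strip()))
        except Exception as exc:  # one bad scan should not abort the batch
            results.append(PageResult(page_id, "", error=str(exc)))
    return results


def stub_engine(image_bytes: bytes) -> str:
    """Hypothetical stand-in for a real OCR model call."""
    if not image_bytes:
        raise ValueError("empty image")
    return image_bytes.decode("utf-8")


# Invented page identifiers and contents.
pages = {"reel1-p001": b" DEED OF SALE ", "reel1-p002": b""}
results = run_ocr_batch(pages, stub_engine)
for result in results:
    print(result)
```

Keeping the engine behind a plain function signature makes it straightforward to compare two OCR models on the same batch, or to swap one out later without touching the rest of the pipeline.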

Classification models are essential for organizing data into meaningful categories, improving accessibility and operational efficiency.

Leveraging classification models can lead to significant cost savings and performance improvements. They offer targeted solutions over generalized models like LLMs.
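For a sense of how small a targeted classifier can be, here is a minimal multinomial naive Bayes text classifier written with only the standard library. It is a sketch, not the approach used in any particular project: the training snippets and the "deed" and "birth" categories are invented, and tokenization is a plain whitespace split.

```python
import math
from collections import Counter, defaultdict
from typing import Iterable, Optional


class NaiveBayesTextClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.doc_counts = Counter()  # label -> number of documents
        self.vocab = set()

    def fit(self, texts: Iterable[str], labels: Iterable[str]) -> "NaiveBayesTextClassifier":
        for text, label in zip(texts, labels):
            words = text.lower().split()
            self.word_counts[label].update(words)
            self.doc_counts[label] += 1
            self.vocab.update(words)
        return self

    def predict(self, text: str) -> Optional[str]:
        # Words never seen in training carry no evidence, so they are skipped.
        words = [w for w in text.lower().split() if w in self.vocab]
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.doc_counts.items():
            score = math.log(n_docs / total_docs)  # log prior
            total_words = sum(self.word_counts[label].values())
            for word in words:
                count = self.word_counts[label][word] + 1  # add-one smoothing
                score += math.log(count / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented OCR snippets and categories.
texts = [
    "warranty deed grantor grantee parcel",
    "deed of trust parcel lot county",
    "certificate of birth mother father",
    "birth certificate infant born county",
]
labels = ["deed", "deed", "birth", "birth"]

clf = NaiveBayesTextClassifier().fit(texts, labels)
print(clf.predict("deed for parcel in county"), clf.predict("birth of infant"))
```

A model like this trains in milliseconds and can serve as a baseline; if a pre-trained classifier does not beat it on a held-out sample, the extra complexity is hard to justify.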

For full stack engineers, integrating these models is now more accessible than ever, thanks to open-source platforms and comprehensive resources available online.

Deploying non-LLM based machine learning models requires meticulous planning and execution to ensure optimal performance, scalability, and cost-efficiency. It is essential to consider cloud, on-premises, and hybrid deployment options based on specific project requirements and constraints.

By leveraging containerization tools like Docker and orchestration platforms like Kubernetes, engineers can seamlessly deploy, manage, and scale models with minimal downtime and maximum flexibility.
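What actually goes inside the container is usually a small HTTP service wrapped around the model. The sketch below uses only Python's standard library and keeps the request logic in a plain function so it can be tested without opening a socket. Everything specific here is an assumption made for illustration: the `/healthz` and `/predict` paths, the port, and the keyword-based `classify` placeholder standing in for a real model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple


def classify(text: str) -> str:
    """Hypothetical placeholder for a real model call."""
    return "deed" if "deed" in text.lower() else "other"


def handle_request(method: str, path: str, body: bytes) -> Tuple[int, dict]:
    """Pure request logic: returns (HTTP status, JSON-serializable payload)."""
    if method == "GET" and path == "/healthz":
        # An orchestrator can poll this endpoint to check the container is alive.
        return 200, {"status": "ok"}
    if method == "POST" and path == "/predict":
        try:
            payload = json.loads(body or b"{}")
            text = payload["text"]
        except (ValueError, KeyError, TypeError):
            text = None
        if not isinstance(text, str):
            return 400, {"error": "expected JSON body with a string 'text' field"}
        return 200, {"label": classify(text)}
    return 404, {"error": "not found"}


class Handler(BaseHTTPRequestHandler):
    def _respond(self, status: int, payload: dict) -> None:
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self) -> None:
        self._respond(*handle_request("GET", self.path, b""))

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        self._respond(*handle_request("POST", self.path, self.rfile.read(length)))


def serve(port: int = 8080) -> None:
    """Blocking entry point; a container's start command would call this."""
    HTTPServer(("0.0.0.0", port), Handler).serve_forever()
```

Because the service holds no state between requests, running more replicas behind a load balancer is enough to scale it horizontally.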

Open source platforms have revolutionized machine learning.

These platforms provide full stack engineers with ready-to-use, high-quality models. They significantly reduce the time and effort needed to develop machine learning applications, allowing teams to deploy effective solutions rapidly. HuggingFace, for instance, offers a vast repository of open-source models that engineers can easily access and integrate into their systems.

The advantages are truly transformative.

Engineers no longer need to start from scratch; they can build upon existing foundations to innovate. Moreover, they can customize these pre-trained models to better meet their specific use cases, thus achieving superior results quickly and efficiently.

By leveraging these open-source platforms, full stack engineers can now harness the power of state-of-the-art machine learning technologies, pushing beyond traditional limits. These resources not only democratize access to advanced tools but also foster a collaborative environment where continuous improvement and innovation thrive.

In the realm of non-LLM based machine learning, tangible impacts are evident across various industries.

For instance, detecting anomalies in manufacturing processes using advanced image recognition greatly reduces operational costs and improves efficiency. Such capabilities showcase the adaptability and power of specialized models.

Applying purpose-built models for specific tasks often yields more precise results than general solutions.

A compelling example is the task of image ingestion.

One recent success story involved an ambitious project centered around large-scale image ingestion. The primary objective here was to handle a substantial volume of microfilm images using OCR and classification models. Once thought archaic, microfilm continues to store a treasure trove of historical and legal documents. Innovatively extracting and organizing this data is not only feasible but also transformative.

Specialized OCR models excelled here.

Instead of defaulting to general LLMs, which may incur high costs and lower accuracy, the team deployed purpose-built open-source OCR and classification models in the cloud. This intelligent approach considerably reduced expenses and significantly improved the output quality.

Their experience underscores a critical point: full stack engineers can spearhead impactful machine learning projects using non-LLM models. As of 2023, the technology landscape presents these professionals with unprecedented opportunities to employ advanced models, fostering innovation and driving meaningful advancements in their respective fields.

Non-LLM based machine learning presents compelling cost advantages, maximizing the efficiency of resource utilization.

For instance, deploying open-source OCR and classification models in the cloud significantly cuts down expenses compared to generalized LLM solutions. Full stack engineers can achieve higher accuracy with models tailored for specific tasks, ensuring optimal performance without incurring exorbitant costs.
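A quick back-of-the-envelope calculation is often enough to see where the savings come from. The sketch below compares a self-hosted model billed by instance time with a service billed per page. Every number in it is a hypothetical placeholder, not a measured throughput or a quoted price; substitute figures from your own benchmarks and your provider's current pricing.

```python
def self_hosted_cost(pages: int, pages_per_second: float, instance_cost_per_hour: float) -> float:
    """Cost of running one instance long enough to process all pages."""
    hours = pages / pages_per_second / 3600
    return hours * instance_cost_per_hour


def per_page_cost(pages: int, cost_per_page: float) -> float:
    """Cost of a service that charges a flat fee per page."""
    return pages * cost_per_page


# Hypothetical placeholders only: replace with measured and quoted values.
PAGES = 1_000_000
hosted = self_hosted_cost(PAGES, pages_per_second=5.0, instance_cost_per_hour=1.00)
metered = per_page_cost(PAGES, cost_per_page=0.01)

print(f"self-hosted: ${hosted:,.2f}  per-page service: ${metered:,.2f}")
```

The self-hosted figure scales with throughput, so the comparison is only as good as the pages-per-second number; measure it on representative scans before committing to a budget.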

Moreover, this approach offers a practical pathway to maintaining budget constraints without compromising the quality of the output. By leveraging specialized models, teams can produce detailed and precise results that surpass the capabilities of more costly, generalized systems.

Ultimately, adopting a strategic focus on non-LLM models empowers organizations to innovate without financial strain. It positions them to harness cutting-edge technologies, deploy them effectively, and drive substantial advancements in performance and cost-efficiency. This balanced approach aligns with the dynamic demands of modern projects, fostering a culture of continual growth and excellence.

Building a versatile and capable team starts with training.

Investing in training programs can transform your workforce. It's essential to identify areas where skill gaps exist and provide targeted education to address them. Moreover, leveraging online resources ensures continual learning and adaptability, equipping your team with the latest competencies to handle diverse ML tasks effectively.

Never underestimate the power of workshops.

These engaging sessions offer practical experience and foster collaboration, so your team not only gains knowledge but also strengthens its ability to work together. It’s a strategy that pays dividends, enhancing overall efficiency and innovation.

Incorporating project-based learning, combining theoretical instruction with real-world application, can shape your team into a robust, versatile unit. Undoubtedly, this comprehensive approach will equip them with the skills required to master non-LLM based machine learning strategies and technologies.

Subscribe to our webinars at hirefraction.com